In a network or cluster of elements, the System Data Synchronization feature keeps programming data, such as Interconnect Handling Restrictions, Feature Access Codes, and Class of Service Options, identical at each element.

Without the System Data Synchronization (SDS) feature, you would need to log into each element and manually program the data to be the same, or program a master database and then restore it to each element. Then, you would need to make all future modifications of system data on each element to keep the network and/or cluster element databases in sync.

The SDS feature reduces the time required to set up and manage networks and/or clusters of 3300 ICPs by allowing you to:

compare the data in a programming form of one system against the data in the same form on another system.

start sharing system form data among the elements (3300 ICP systems) of a network, cluster, or group.

synchronize the form data from a master element across the forms on the other elements within the network, cluster, or element group (for example, an Administrative Group).

maintain the telephone directories on all the elements in the network or cluster (see Remote Directory Number Synchronization).
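The comparison step in the list above can be pictured as a field-by-field diff of two copies of the same form. The following is only a minimal sketch under stated assumptions: the function name `compare_forms`, the dictionary layout, and the sample Class of Service fields are hypothetical and are not part of the 3300 ICP interface.

```python
def compare_forms(local: dict, remote: dict) -> dict:
    """Return the fields whose values differ between two copies of a form.

    Each result maps a field name to (local value, remote value).
    None marks a field that is missing on that system.
    """
    differences = {}
    for field_name in sorted(local.keys() | remote.keys()):
        local_value = local.get(field_name)
        remote_value = remote.get(field_name)
        if local_value != remote_value:
            differences[field_name] = (local_value, remote_value)
    return differences


# Hypothetical Class of Service data on two systems.
system_a_cos = {"Call Forwarding": "Yes", "Do Not Disturb": "Yes", "Call Pickup": "No"}
system_b_cos = {"Call Forwarding": "Yes", "Do Not Disturb": "No"}

diff = compare_forms(system_a_cos, system_b_cos)
for field_name, (a_value, b_value) in diff.items():
    print(f"{field_name}: A={a_value!r} B={b_value!r}")
```

An empty result means the two copies of the form are already identical.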

After a network or cluster has been set up with SDS, all adds, modifications, and deletions to the data that you have designated as shared are automatically distributed to the other elements in the network or cluster at the specified scopes.

In resilient configurations, SDS also synchronizes the data of resilient users and devices between primary and secondary controllers. After you set up data sharing between the primary and secondary controllers, the application automatically keeps user and device data, such as feature key programming and DND settings, synchronized between the two controllers, regardless of whether the changes are made on the primary or the secondary controller:

If resilient users modify their set programming while their sets are being supported on the primary controller, SDS automatically updates the secondary controller with the changes.

If resilient users fail over to their secondary and modify their set programming, SDS updates the primary controller with the changes after the primary controller returns to service.

If an administrator updates resilient user and device data on either the primary or secondary controller, SDS automatically updates the database of the other controller.
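The three cases above amount to two-way synchronization in which changes made while one controller is out of service are delivered once it returns. The sketch below models that behavior in a simplified way. The `Controller` class, the `pending` queue, and the sample keys are assumptions made for illustration; the source does not describe how SDS stores or replays updates internally.

```python
from dataclasses import dataclass, field


@dataclass
class Controller:
    name: str
    in_service: bool = True
    data: dict = field(default_factory=dict)     # resilient user and device data
    pending: list = field(default_factory=list)  # updates waiting for the peer (assumed mechanism)


def apply_change(source: Controller, peer: Controller, key: str, value: str) -> None:
    """Record a change on `source` and share it with `peer`."""
    source.data[key] = value
    if peer.in_service:
        peer.data[key] = value
    else:
        # The peer is out of service: hold the update until it returns.
        source.pending.append((key, value))


def return_to_service(recovered: Controller, peer: Controller) -> None:
    """Bring `recovered` back and replay the updates that `peer` held for it."""
    recovered.in_service = True
    for key, value in peer.pending:
        recovered.data[key] = value
    peer.pending.clear()


primary = Controller("primary")
secondary = Controller("secondary")

# Change made while the set is supported on the primary controller.
apply_change(primary, secondary, "ext 3000 key 2", "Speed Call")

# The user fails over and changes a setting on the secondary controller.
primary.in_service = False
apply_change(secondary, primary, "ext 3000 DND", "On")

# The primary returns to service and receives the held change.
return_to_service(primary, secondary)
print(primary.data == secondary.data)
```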

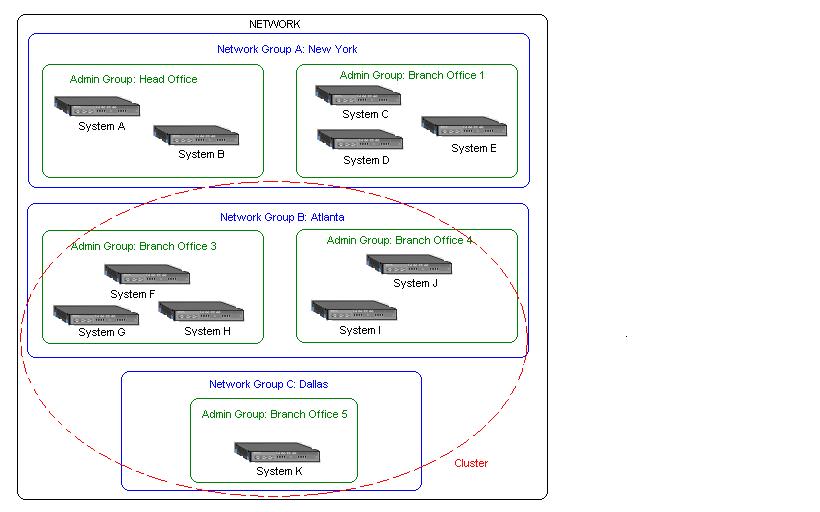

The network, the highest grouping, encompasses all the member elements. Within the network, you can create groups of elements and use SDS to share data among the elements in the groups. There are three different types of groups:

network groups

administrative groups, and

clusters.

Network groups are sub-groups of elements within the network. Administrative groups are sub-groups of elements within a network group. Each element must belong to at least one administrative group and each administrative group must belong to a network group.

A cluster is a separate group of elements within the network that shares a common telephone directory. An element may or may not belong to a cluster. Clusters are independent of network groups and administrative groups. An element that belongs to a cluster can belong to multiple administrative groups and network groups. Likewise, elements that belong to administrative groups or network groups can belong to multiple clusters.

Note: You can assign elements to clusters and administrative groups. However, in MCD Release 4.0, all elements belong to one Network Group. In a future release, a form similar to the Admin Groups form will be added to allow you to manage Network Groups.
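The membership rules described above (every element belongs to at least one administrative group, every administrative group belongs to a network group, and cluster membership is optional) can be expressed as a small consistency check. This is a sketch only: the `Element` class, the `validate` function, and the group names are hypothetical and do not correspond to any form or command on the system.

```python
from dataclasses import dataclass, field


@dataclass
class Element:
    name: str
    admin_groups: set = field(default_factory=set)  # at least one is required
    clusters: set = field(default_factory=set)      # optional, may be empty


def validate(elements, admin_group_parent):
    """Return a list of rule violations; the list is empty for a valid layout.

    `admin_group_parent` maps each administrative group to its network group.
    """
    problems = []
    for element in elements:
        if not element.admin_groups:
            problems.append(f"{element.name}: belongs to no administrative group")
        for group in sorted(element.admin_groups):
            if group not in admin_group_parent:
                problems.append(f"{group}: belongs to no network group")
    return problems


admin_group_parent = {"Admin Group 1": "Network Group 1"}
elements = [
    Element("A", {"Admin Group 1"}, {"Cluster 1"}),
    Element("B", {"Admin Group 1"}),  # valid without a cluster
    Element("C"),                     # invalid: no administrative group
]

problems = validate(elements, admin_group_parent)
print(problems)
```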

The following illustration shows the group hierarchy:

SDS allows form data to be shared among 3300 ICP elements at the following scopes:

All Network Elements: distribute updates to all network elements that support System Data Synchronization (this option is reserved for future use, when SDS supports elements other than 3300 ICPs).

All 3300 ICPs: distribute updates to all 3300 ICPs in the network only (this setting will not distribute data to non-3300 ICP elements).

Network Group Members: distribute updates to all elements that belong to the network group. A network group is a sub-group of elements within the network of elements. You typically use network groups to share data among groups of elements at the regional level.

Admin Group Members: distribute updates to all elements that belong to the administrative group. Use administrative groups to share data among groups of elements within a network group.

All Cluster Members: distribute updates to all 3300 ICP cluster elements.

Resilient Pair: distribute resilient user and device data between a primary and secondary controller.

Member Hosts: distribute group information and group member data (for example, cluster pickup groups information and cluster pickup group members) between all the elements on which the group members reside. This sharing scope does not apply to network hunt groups or resilient ACD hunt groups.

Hospitality Gateway and Guest Room Host: distribute updates to all 3300 ICP elements in the hospitality cluster.

Hospitality Gateway and Suite Host: distribute updates to all 3300 ICP elements in the hospitality cluster.

None: do not distribute the form data.
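To make the scopes concrete, the sketch below resolves which elements would receive an update for a subset of the scopes listed above. It is deliberately simplified and rests on assumptions: each element is given exactly one administrative group and at most one cluster (the source allows several), and the `Scope` enum, `Element` class, and `recipients` function are hypothetical names rather than product identifiers.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Scope(Enum):
    ALL_3300_ICPS = "All 3300 ICPs"
    NETWORK_GROUP_MEMBERS = "Network Group Members"
    ADMIN_GROUP_MEMBERS = "Admin Group Members"
    ALL_CLUSTER_MEMBERS = "All Cluster Members"
    NONE = "None"


@dataclass(frozen=True)
class Element:
    name: str
    network_group: str
    admin_group: str
    cluster: Optional[str] = None


def recipients(source, elements, scope):
    """Names of the elements that receive an update made on `source`."""
    def shares(other):
        if scope is Scope.ALL_3300_ICPS:
            return True
        if scope is Scope.NETWORK_GROUP_MEMBERS:
            return other.network_group == source.network_group
        if scope is Scope.ADMIN_GROUP_MEMBERS:
            return other.admin_group == source.admin_group
        if scope is Scope.ALL_CLUSTER_MEMBERS:
            return source.cluster is not None and other.cluster == source.cluster
        return False  # Scope.NONE: the form data is not distributed

    return sorted(e.name for e in elements if e != source and shares(e))


# Hypothetical four-element network.
a = Element("A", "Network Group 1", "Admin Group 1", "Cluster 1")
b = Element("B", "Network Group 1", "Admin Group 1", "Cluster 1")
c = Element("C", "Network Group 1", "Admin Group 2")
d = Element("D", "Network Group 2", "Admin Group 3")
network = [a, b, c, d]

for scope in Scope:
    print(scope.value, "->", recipients(a, network, scope))
```

Narrower scopes yield smaller recipient lists, which is why regional data is typically shared at the network group scope and local data at the administrative group scope.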

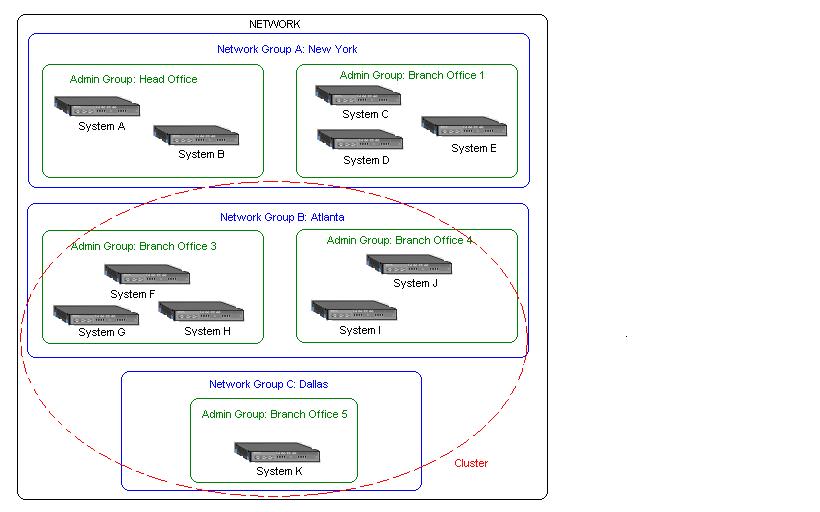

The following illustration shows an example of Feature Access Codes (FAC) being shared at the network scope, Class of Service (COS) settings being shared at the network group scope, and Class of Restriction (COR) settings being shared at the administrative group scope.

In the following illustration:

all systems (A, B, C, D, E, F, G, and H) share FAC data at the network scope

systems A, B, C, and D share COS data at the network group members 1 scope

systems E, F, G and H share COS data at the network group members 2 scope

systems C and D share COR data at the administrative group 1 scope

systems C and D share COR data at the administrative group 2 scope

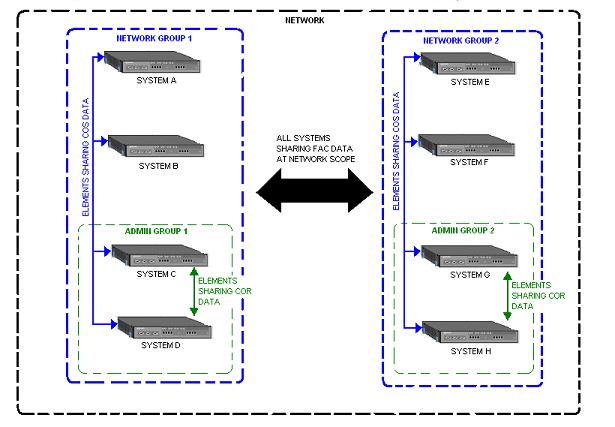

The following illustration shows an example of Feature Access Codes (FAC) being shared at the network scope while the Class of Service (COS) settings are shared at the cluster scope only. In the following illustration:

systems A, B, C, and D share FAC data at the network scope

systems A and B share COS data at the cluster scope

systems C and D share COS data at the cluster scope

In the above example, SDS would be configured such that:

COS changes on system A would be distributed only to system B, and COS changes on system B would be distributed only to system A.

COS changes on system C would be distributed only to system D, and COS changes on system D would be distributed only to system C.

FAC changes on any of the four systems would be distributed to all other systems (A, B, C, and D).
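The distribution rules in this example can be checked with a small simulation that applies a change to every system sharing the form with the originating system. The sharing sets below come from the example; everything else (the `SHARING` table, the `change` function, and the sample field names and values) is hypothetical and only illustrates the behavior.

```python
# Which systems share each form, taken from the example above.
SHARING = {
    "FAC": [{"A", "B", "C", "D"}],    # network scope
    "COS": [{"A", "B"}, {"C", "D"}],  # cluster scope, one set per cluster
}

# One database per system: form name -> {field: value}.
databases = {name: {"FAC": {}, "COS": {}} for name in "ABCD"}


def change(system, form, field_name, value):
    """Apply a change on `system` and copy it to every system that shares `form` with it.

    Returns the names of the other systems that received the update.
    """
    targets = {system}
    for group in SHARING[form]:
        if system in group:
            targets |= group
    for target in targets:
        databases[target][form][field_name] = value
    return sorted(targets - {system})


cos_targets = change("A", "COS", "COS 1 Do Not Disturb", "Yes")  # hypothetical field
fac_targets = change("C", "FAC", "Call Pickup", "*7")            # hypothetical code

print(cos_targets)
print(fac_targets)
```

After both changes, systems C and D still have empty COS data, while all four systems hold the same FAC entry.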

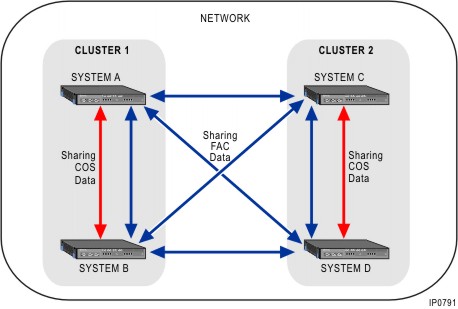

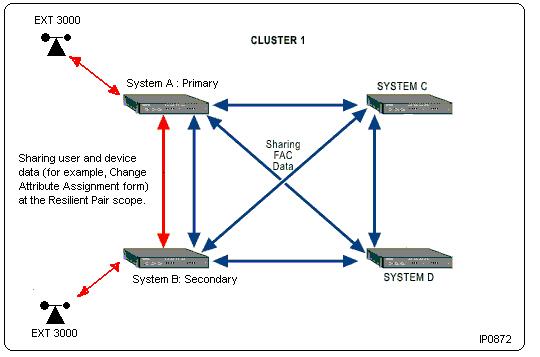

At the resilient pair scope, SDS shares the selected device data, user data, and user-managed data between the primary controller and the secondary controller. In the following example, SDS would be configured such that:

User and device changes on system A (primary) would be distributed only to system B (secondary).

User and device changes on system B (secondary) would be distributed only to system A (primary).

For example, if the user at extension 3000 programs a feature access key on his or her set while on the primary controller (System A), SDS distributes the programming update for the new key to the user's secondary controller (System B), but not to System C or System D. Likewise, if the user's set is on the secondary controller (System B) and the user programs a feature access key, SDS distributes the programming update for the new key to the user's primary controller (System A), but not to System C or System D.

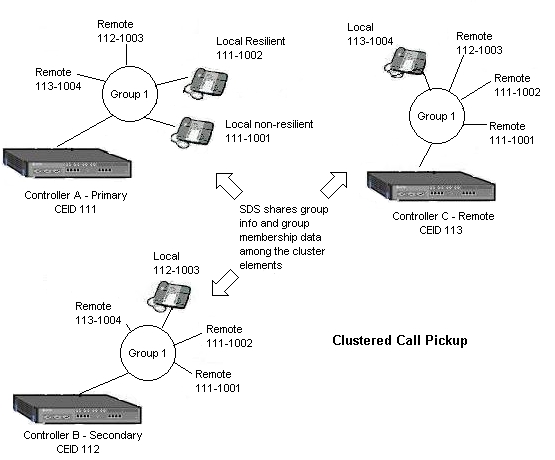

At the member hosts scope, SDS shares the group information and group membership data between the elements in a cluster. In the following example, SDS maintains the call pickup group information and call pickup group membership on the cluster elements.
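One way to picture the member hosts scope is as the set of elements on which at least one group member resides. The sketch below derives that set for a call pickup group. The extension numbers, the `host_of` mapping, and the `member_hosts` function are hypothetical and serve only to illustrate the idea.

```python
def member_hosts(group_members, host_of):
    """Return the elements that host at least one member of the group."""
    return sorted({host_of[member] for member in group_members})


# Hypothetical mapping of extensions to the cluster elements that host them.
host_of = {"1001": "A", "1002": "A", "2001": "B", "3001": "C"}

# Hypothetical cluster pickup group with members on two elements.
pickup_group_1 = ["1001", "2001"]

hosts = member_hosts(pickup_group_1, host_of)
print(hosts)
```

In this sketch, group information and membership for the pickup group would be shared between elements A and B only, because element C hosts none of the group's members.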

What Data Can Be Shared?